Web scraping is a powerful technique for extracting data from web pages. While there are many sophisticated tools and libraries available, sometimes the simplest solution is to use your browser’s built-in developer tools. This guide will show you how to scrape data using Chrome’s developer console and XPath selectors, with a practical example of extracting Facebook friend data.

Prerequisites

Before you begin, ensure you have:

- Google Chrome browser installed

- Basic understanding of HTML and JavaScript

- Familiarity with browser developer tools

Basic Web Scraping Steps

Open Developer Tools

- Press

Cmd-Option-ion macOS - Press

Ctrl-Shift-ion Windows/Linux - Or click the vertical … menu > More Tools > Developer Tools

- Press

Navigate to the Console tab

- This is where you’ll run your scraping code

- Make sure you’re on the page containing the data you want to scrape

Use XPath to Select Elements

// Basic XPath selector const result = $x("//div[@class='target-class']"); // Select by attribute const links = $x("//a[@href]"); // Select nested elements const nested = $x("//div[@class='parent']//span[@class='child']");

Practical Example: Scraping Facebook Friends

Navigate to Facebook Friends List

- Go to https://www.facebook.com/friends

- Or click the Friends tab in Facebook

- Scroll slowly to load all friends

Open Developer Tools

- Use keyboard shortcut or menu option

- Ensure you’re on the Console tab

Run the Scraping Code

// Extract friend profile URLs const result = $x( "/html/body/div/div/div/div/div/div/div/div/div/div/div/div/div/div/div/div/div[*]/a/@href" ); // Log the results result.map((x) => { console.log(x); });Save the Results

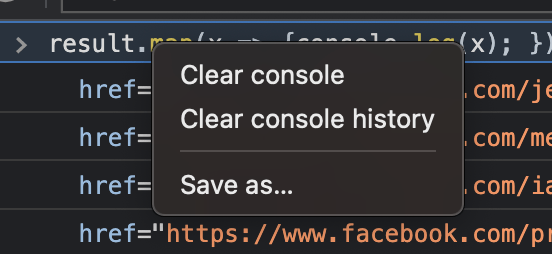

- Right-click the console output

- Select “Save as…”

- Choose a location to save the data

Advanced Scraping Techniques

Using CSS Selectors

// Select elements by class

document.querySelectorAll('.target-class');

// Select elements by ID

document.getElementById('target-id');

// Select multiple elements

document.querySelectorAll('div.target-class, span.target-class');Extracting Specific Data

// Extract text content

const text = $x("//div[@class='content']/text()");

// Extract multiple attributes

const data = $x("//div[@class='item']/@*");

// Extract structured data

const items = $x("//div[@class='item']").map(el => ({

title: el.querySelector('.title').textContent,

link: el.querySelector('a').href

}));Best Practices

Respect Website Policies

- Check robots.txt

- Follow rate limiting

- Don’t overload servers

Error Handling

- Add try-catch blocks

- Validate data

- Handle missing elements

Data Processing

- Clean and format data

- Remove duplicates

- Validate results

Troubleshooting

Common Issues

Elements Not Found

- Check selector syntax

- Verify page structure

- Wait for dynamic content

Console Errors

- Check JavaScript syntax

- Verify XPath expressions

- Handle null values

Data Format Issues

- Clean output data

- Handle special characters

- Format consistently

Comments #